If I show you a single picture of a room, you can tell me right away that there’s a table with a chair in front of it, they’re probably about the same size, about this far from each other, with the walls this far away: enough to draw a rough map of the room. Computer vision systems don’t have this intuitive understanding of space, but the latest research from DeepMind brings them closer than ever before.

The new paper from the Google-owned research outfit was published today in the journal Science (complete with news item). It details a system whereby a neural network, knowing practically nothing, can look at one or two static 2D images of a scene and reconstruct a reasonably accurate 3D representation of it. We’re not talking about going from snapshots to full 3D images (Facebook’s working on that) but rather replicating the intuitive and space-conscious way that all humans view and analyze the world.

When I say it knows practically nothing, I don’t mean it’s just some standard machine learning system. Most computer vision algorithms work via what’s called supervised learning, in which they ingest a great deal of data that’s been labeled by humans with the correct answers, for example images with everything in them outlined and named.

This new system, on the other hand, has no such knowledge to draw on. It works entirely independently of any ideas of how to see the world as we do, like how objects’ colors change towards their edges, how they get bigger and smaller as their distance changes, and so on.

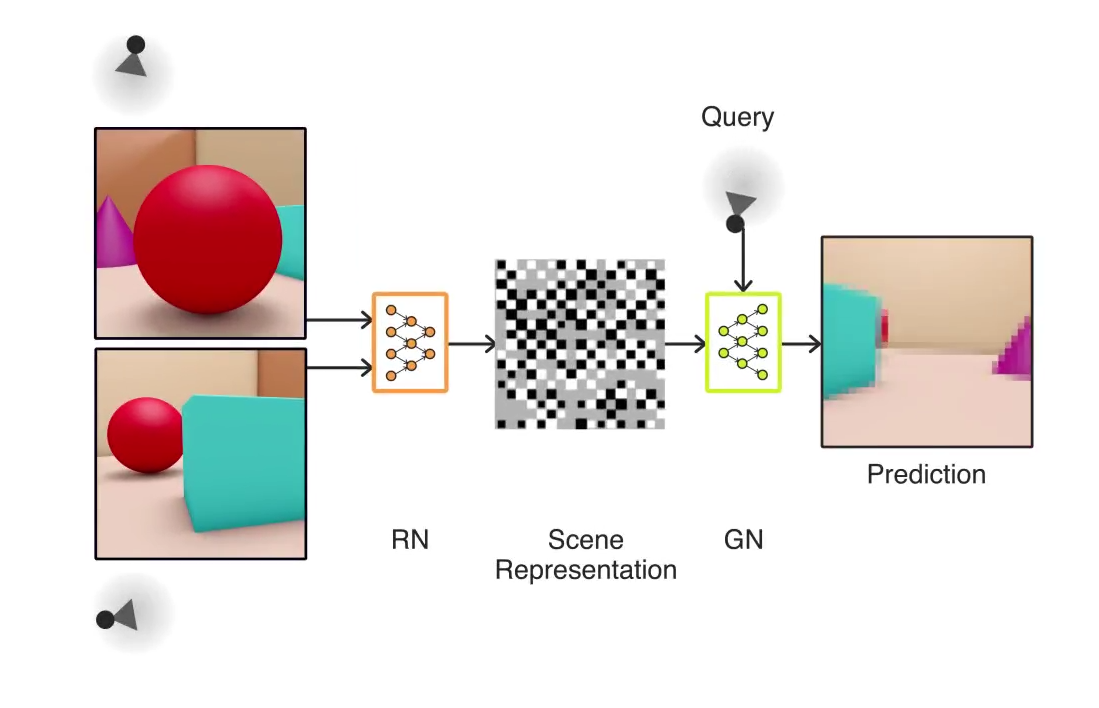

It works, roughly speaking, like this. One half of the system is its “representation” part, which can observe a given 3D scene from some angle, encoding it in a complex mathematical form called a vector. Then there’s the “generative” part, which, based only on the vectors created earlier, predicts what a different part of the scene would look like.
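To make that two-part split a little more concrete, here is a toy sketch in Python with NumPy. It is not DeepMind’s code. The function names, the tiny image size, the five-number viewpoint, the single layer of random untrained weights, and the choice to combine several observations by summing their vectors are all assumptions made for illustration. The real system is a deep network that has been trained, so this only shows how data would flow from pictures to a vector to a predicted view.

```python
import numpy as np

rng = np.random.default_rng(0)

# All sizes below are hypothetical, chosen only to keep the toy small.
IMAGE_SHAPE = (8, 8, 3)   # height, width, color channels
POSE_DIM = 5              # assumed viewpoint encoding (e.g. position plus orientation)
REPR_DIM = 16             # length of the scene vector
N_PIXELS = int(np.prod(IMAGE_SHAPE))

# Random weights stand in for parameters a real system would learn.
W_repr = rng.normal(scale=0.1, size=(N_PIXELS + POSE_DIM, REPR_DIM))
W_gen = rng.normal(scale=0.1, size=(REPR_DIM + POSE_DIM, N_PIXELS))


def represent(image, viewpoint):
    """'Representation' part: turn one picture and its viewpoint into a vector."""
    x = np.concatenate([image.ravel(), viewpoint])
    return np.tanh(x @ W_repr)


def aggregate(observations):
    """Combine the vectors from several pictures by summing them (an assumption)."""
    return sum(represent(image, viewpoint) for image, viewpoint in observations)


def generate(scene_vector, query_viewpoint):
    """'Generative' part: predict an image from the scene vector and a new viewpoint."""
    x = np.concatenate([scene_vector, query_viewpoint])
    logits = x @ W_gen
    pixels = 1.0 / (1.0 + np.exp(-logits))  # squash into the 0..1 pixel range
    return pixels.reshape(IMAGE_SHAPE)


# Two made-up observations of the same scene, then a query from a third spot.
observations = [
    (rng.random(IMAGE_SHAPE), rng.normal(size=POSE_DIM)),
    (rng.random(IMAGE_SHAPE), rng.normal(size=POSE_DIM)),
]
scene_vector = aggregate(observations)
predicted = generate(scene_vector, rng.normal(size=POSE_DIM))
print(scene_vector.shape, predicted.shape)
```

Note that the generator never sees the original pictures, only the vector and the viewpoint it is asked about. With random weights the output is just noise, of course; training is what would make the prediction resemble the actual scene.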

(A video showing a bit more of how this works is available here.)

Think of it like someone handing you a couple of pictures of a room, then asking you to draw what you’d see if you were standing in a specific spot in it. Again, this is simple enough for us, but computers have no natural ability to do it; their sense of sight, if we can call it that, is extremely rudimentary and literal, and of course machines lack imagination.

Yet there are few better words that describe the ability to say what’s behind something when you can’t see it.

“It was not at all clear that a neural network could ever learn to create images in such a precise and controlled manner,” said lead author of the paper, Ali Eslami, in a release accompanying the paper. “However we found that sufficiently deep networks can learn about perspective, occlusion and lighting, without any human engineering. This was a super surprising finding.”

It also allows the system to accurately recreate a 3D object from a single viewpoint, such as the blocks shown here:

I’m not sure I could do that.

Obviously there’s nothing in any single observation to tell the system whether some part of the blocks extends forever away from the camera. But it creates a plausible version of the block structure regardless, one that is accurate in every way. Adding one or two more observations requires the system to reconcile multiple views, but results in an even better representation.

This kind of ability is especially critical for robots, because they have to navigate the real world by sensing it and reacting to what they see. With limited information, such as some important clue that’s temporarily hidden from view, they can freeze up or make illogical choices. But with something like this in their robotic brains, they could make reasonable assumptions about, say, the layout of a room without having to ground-truth every inch.

“Although we need more data and faster hardware before we can deploy this new type of system in the real world,” Eslami said, “it takes us one step closer to understanding how we may build agents that learn by themselves.”